Nvidia Jetson Nano Review

Introduction

NVIDIA announced the Jetson Nano Developer Kit at the 2019 NVIDIA GPU Technology Conference (GTC). It is a $99 USD computer aimed at embedded designers, researchers, and DIY makers, and it delivers the power of modern AI in a compact, easy-to-use platform with full software programmability. The NVIDIA® Jetson Nano™ Developer Kit is a small, powerful computer that lets you run multiple neural networks in parallel for applications like image classification, object detection, segmentation, and speech processing, all on a platform that runs on as little as 5 watts. The Jetson Nano is the successor and little brother of the expensive Jetson TX1, which costs $499 USD.

| Platform | CPU | GPU | Memory | Storage | Price (USD) |

|---|---|---|---|---|---|

| Jetson TX1 (Tegra X1) | 4x ARM Cortex A57 @ 1.73 GHz | 256x Maxwell @ 998 MHz (1 TFLOP) | 4GB LPDDR4 (25.6 GB/s) | 16 GB eMMC | $499 |

| Jetson Nano | 4x ARM Cortex A57 @ 1.43 GHz | 128x Maxwell @ 921 MHz (472 GFLOPS) | 4GB LPDDR4 (25.6 GB/s) | Micro SD | $99 |

The Jetson Nano has nearly half the GPU compute power of the Jetson TX1 (472 GFLOPS / 1 TFLOPS = 0.472) at roughly one-fifth of the price. Per dollar, you get about 4.77 GFLOPS with the Jetson Nano versus about 2.00 GFLOPS with the Jetson TX1. Sounds awesome, right? I will get to the weaknesses too, so keep reading.
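The price-to-performance arithmetic above can be reproduced with a few lines of Python. This is only a sketch: the GFLOPS and price figures are copied from the table above, and the function and dictionary names are made up for illustration.

```python
# Peak GPU throughput and list price, copied from the comparison table above.
BOARDS = {
    "Jetson Nano": {"gflops": 472.0, "price_usd": 99.0},
    "Jetson TX1": {"gflops": 1000.0, "price_usd": 499.0},  # 1 TFLOPS
}


def gflops_per_dollar(gflops, price_usd):
    """Peak GFLOPS bought by one US dollar."""
    return gflops / price_usd


for name, spec in BOARDS.items():
    print(f"{name}: {gflops_per_dollar(**spec):.2f} GFLOPS per USD")

nano, tx1 = BOARDS["Jetson Nano"], BOARDS["Jetson TX1"]
print(f"Compute ratio (Nano / TX1): {nano['gflops'] / tx1['gflops']:.3f}")
print(f"Price ratio (Nano / TX1): {nano['price_usd'] / tx1['price_usd']:.3f}")
```

These are peak theoretical numbers, so they say nothing about how much of that throughput a real workload can actually use.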

Jetson Nano vs Jetson TX1, TX2 and AGX Xavier

| Features | Jetson Nano | Jetson TX1 | Jetson TX2 / TX2i | Jetson AGX Xavier |

|---|---|---|---|---|

| CPU | ARM Cortex-A57 (quad-core) @ 1.43GHz | ARM Cortex-A57 (quad-core) @ 1.73GHz | ARM Cortex-A57 (quad-core) @ 2GHz +NVIDIA Denver2 (dual-core) @ 2GHz | NVIDIA Carmel ARMv8.2 (octal-core) @ 2.26GHz(4x2MB L2 + 4MB L3) |

| GPU | 128-core NVIDIA Maxwell @ 921MHz | 256-core NVIDIA Maxwell @ 998MHz | 256-core NVIDIA Pascal @ 1300MHz | 512-core Volta @ 1377 MHz + 64 Tensor Cores |

| DL | NVIDIA GPU support (CUDA, cuDNN, TensorRT) | NVIDIA GPU support (CUDA, cuDNN, TensorRT) | NVIDIA GPU support (CUDA, cuDNN, TensorRT) | Dual NVIDIA Deep Learning Accelerators |

| Memory | 4GB 64-bit LPDDR4 @ 1600MHz, 25.6 GB/s | 4GB 64-bit LPDDR4 @ 1600MHz, 25.6 GB/s | 8GB 128-bit LPDDR4 @ 1866MHz, 58.3 GB/s | 16GB 256-bit LPDDR4x @ 2133MHz, 137 GB/s |

| Storage | MicroSD card | 16GB eMMC 5.1 | 32GB eMMC 5.1 | 32GB eMMC 5.1 |

| Vision | NVIDIA GPU support (CUDA, VisionWorks, OpenCV) | NVIDIA GPU support (CUDA, VisionWorks, OpenCV) | NVIDIA GPU support (CUDA, VisionWorks, OpenCV) | 7-way VLIW Vision Accelerator |

| Encoder | (1x) 4Kp30, (2x) 1080p60, (4x) 1080p30 | (1x) 4Kp30, (2x) 1080p60, (4x) 1080p30 | 4Kp60, (3x) 4Kp30, (4x) 1080p60, (8x) 1080p30 | (4x) 4Kp60, (8x) 4Kp30, (32x) 1080p30 |

| Decoder | 4Kp60, (2x) 4Kp30, (4x) 1080p60, (8x) 1080p30 | 4Kp60, (2x) 4Kp30, (4x) 1080p60, (8x) 1080p30 | (2x) 4Kp60, (4x) 4Kp30, (7x) 1080p60 | (2x) 8Kp30, (6x) 4Kp60, (12x) 4Kp30 |

| Camera | 12 lanes MIPI CSI-2, 1.5 Gbps per lane | 12 lanes MIPI CSI-2, 1.5 Gbps per lane | 12 lanes MIPI CSI-2, 2.5 Gbps per lane | 16 lanes MIPI CSI-2, 6.8125 Gbps per lane |

| Display | 2x HDMI 2.0 / DP 1.2 / eDP 1.2, 2x MIPI DSI | 2x HDMI 2.0 / DP 1.2 / eDP 1.2, 2x MIPI DSI | 2x HDMI 2.0 / DP 1.2 / eDP 1.2, 2x MIPI DSI | (3x) eDP 1.4 / DP 1.2 / HDMI 2.0 @ 4Kp60 |

| Wireless | M.2 Key-E site on carrier | 802.11a/b/g/n/ac 2×2 867Mbps, Bluetooth 4.0 | 802.11a/b/g/n/ac 2×2 867Mbps, Bluetooth 4.1 | M.2 Key-E site on carrier |

| Ethernet | 10/100/1000 BASE-T Ethernet | 10/100/1000 BASE-T Ethernet | 10/100/1000 BASE-T Ethernet | 10/100/1000 BASE-T Ethernet |

| USB | (4x) USB 3.0 + Micro-USB 2.0 | USB 3.0 + USB 2.0 | USB 3.0 + USB 2.0 | (3x) USB 3.1 + (4x) USB 2.0 |

| PCIe | PCIe Gen 2 x1/x2/x4 | PCIe Gen 2 x5 (1×4 + 1×1) | PCIe Gen 2 x5 (1×4 + 1×1 or 2×1 + 1×2) | PCIe Gen 4 x16 (1×8 + 1×4 + 1×2 + 2×1) |

| CAN | NA | NA | Dual CAN bus controller | Dual CAN bus controller |

| Misc IO | UART, SPI, I2C, I2S, GPIOs | UART, SPI, I2C, I2S, GPIOs | UART, SPI, I2C, I2S, GPIOs | UART, SPI, I2C, I2S, GPIOs |

| Socket | 260-pin edge connector, 45x70mm | 400-pin board-to-board connector, 50x87mm | 400-pin board-to-board connector, 50x87mm | 699-pin board-to-board connector, 100x87mm |

| Thermals | -25°C to 80°C | -25°C to 80°C | -25°C to 80°C | -25°C to 80°C |

| Power | 5/10W | 10W | 7.5W | 10/15/30W |

| Perf | 472 GFLOPS | 1 TFLOPS | 1.3 TFLOPS | 32 TeraOPS |

Purchase and Unboxing

We bought it from the Nvidia India website for Rs. 8,899.00, and it arrived in a few days. There were no problems with ordering or shipping.

You don’t get much in the box at this price point.

- 80x100mm reference carrier board; the complete devkit with module and heatsink weighs 138 grams

- Jetson Nano module with a passive heatsink [ upgradable to active cooling ]

- Cardboard pop-up stand

- Getting Started paper guide

- Not included: SD card, power input selection jumper

Nvidia Jetson Nano Review Box :: Front View

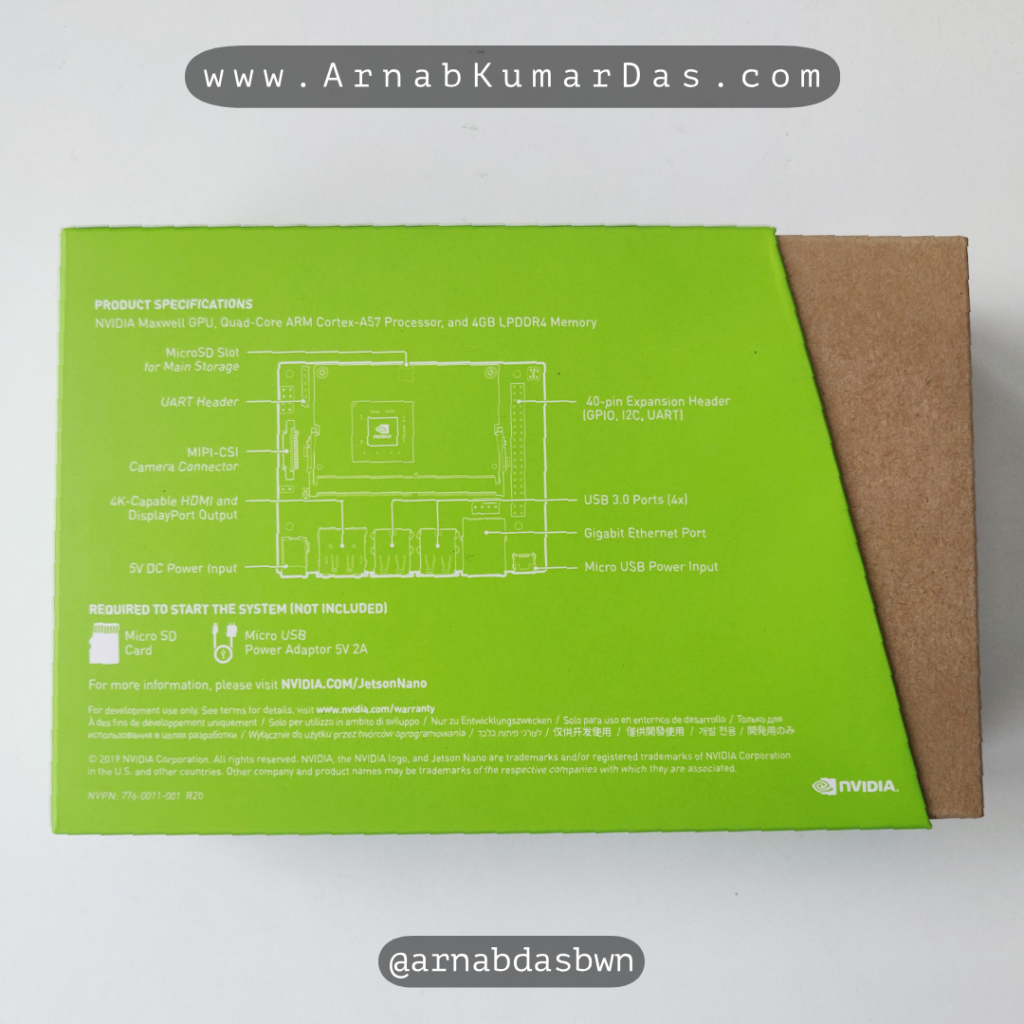

Nvidia Jetson Nano Review Box :: Rear View

Unboxing Nvidia Jetson Nano

Nvidia Jetson Nano Unboxed

The Jetson Nano comes in a nicely designed cardboard box that is simple and pleasing to look at. The dev kit itself is sealed in a matte-finish, soft black anti-static bag.

What You Will Need Extra

- Power supply (one of the following)

  - 5V⎓2A Micro-USB adapter ( Adafruit GEO151UB ) [ I used a OnePlus 6 charger ]

  - 5V⎓4A DC barrel jack adapter, 5.5mm OD x 2.1mm ID x 9.5mm length, center-positive ( Adafruit 1446 ) [ I used a Belkin 5V⎓4A adapter ]

- MicroSD card ( 16GB UHS-1 minimum ) [ the OS will occupy ~12.5GB ]

- A Full HD 1080p or Above Monitor with HDMI or DP Input

- A Computer to Flash the SD Card

Ports on the NVIDIA® Jetson Nano™ Developer Kit

MIPI CSI-2 Camera Interface Nvidia Jetson Nano

Ports of Nvidia Jetson Nano

Side View of Nvidia Jetson Nano

Micro SD Card Slot Nvidia Jetson Nano

Ports on Jetson Nano [ Credit: Nvidia ]

- microSD Card Slot for Main Storage

- 40-pin Expansion Header

- Micro-USB port for 5V⎓2A power input or for data

- Gigabit Ethernet Port

- USB 3.0 ports (x4)

- HDMI Output Port

- DisplayPort Connector

- DC Barrel Jack for 5V⎓4A Power Input

- MIPI CSI camera connector [ supports the Raspberry Pi CSI camera, a good strategy by Nvidia to capture the market ]

GPIO Nvidia Jetson Nano J41 Header

Nvidia has intelligently kept the GPIO header the same as on the Raspberry Pi 3 B+, which should help it capture the market by supporting most Raspberry Pi HATs and accessories out of the box. The J41 GPIO header is also placed so that HATs protrude outwards from the board, because, unlike on the Raspberry Pi, there is no space to accommodate HATs over the PCB.

| Sysfs GPIO | Name | Pin | Pin | Name | Sysfs GPIO |
|---|---|---|---|---|---|
| | 3.3 VDC Power | 1 | 2 | 5.0 VDC Power | |
| | I2C_2_SDA (I2C Bus 1) | 3 | 4 | 5.0 VDC Power | |
| | I2C_2_SCL (I2C Bus 1) | 5 | 6 | GND | |
| gpio216 | AUDIO_MCLK | 7 | 8 | UART_2_TX (/dev/ttyTHS1) | |
| | GND | 9 | 10 | UART_2_RX (/dev/ttyTHS1) | |
| gpio50 | UART_2_RTS | 11 | 12 | I2S_4_SCLK | gpio79 |
| gpio14 | SPI_2_SCK | 13 | 14 | GND | |
| gpio194 | LCD_TE | 15 | 16 | SPI_2_CS1 | gpio232 |
| | 3.3 VDC Power | 17 | 18 | SPI_2_CS0 | gpio15 |
| gpio16 | SPI_1_MOSI | 19 | 20 | GND | |
| gpio17 | SPI_1_MISO | 21 | 22 | SPI_2_MISO | gpio13 |
| gpio18 | SPI_1_SCK | 23 | 24 | SPI_1_CS0 | gpio19 |
| | GND | 25 | 26 | SPI_1_CS1 | gpio20 |
| | I2C_1_SDA (I2C Bus 0) | 27 | 28 | I2C_1_SCL (I2C Bus 0) | |
| gpio149 | CAM_AF_EN | 29 | 30 | GND | |
| gpio200 | GPIO_PZ0 | 31 | 32 | LCD_BL_PWM | gpio168 |
| gpio38 | GPIO_PE6 | 33 | 34 | GND | |
| gpio76 | I2S_4_LRCK | 35 | 36 | UART_2_CTS | gpio51 |
| gpio12 | SPI_2_MOSI | 37 | 38 | I2S_4_SDIN | gpio77 |
| | GND | 39 | 40 | I2S_4_SDOUT | gpio78 |
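To make the Sysfs GPIO column easier to use, here is a small Python sketch that maps a J41 header pin to the paths of the Linux sysfs GPIO interface. The pin numbers are copied from the table above. The helper name and dictionary are hypothetical, and the sketch assumes the kernel exposes the legacy `/sys/class/gpio` interface; it only builds path strings and does not touch the hardware.

```python
# Header pin -> sysfs GPIO number, copied from the J41 table above.
J41_PIN_TO_SYSFS_GPIO = {
    7: 216, 11: 50, 12: 79, 13: 14, 15: 194, 16: 232, 18: 15, 19: 16,
    21: 17, 22: 13, 23: 18, 24: 19, 26: 20, 29: 149, 31: 200, 32: 168,
    33: 38, 35: 76, 36: 51, 37: 12, 38: 77, 40: 78,
}

# Assumption: the legacy sysfs GPIO interface is available at this path.
SYSFS_GPIO_ROOT = "/sys/class/gpio"


def sysfs_gpio_paths(pin):
    """Return the sysfs files involved in driving a given J41 header pin."""
    if pin not in J41_PIN_TO_SYSFS_GPIO:
        raise ValueError(f"Pin {pin} is power, ground, or not a sysfs GPIO")
    gpio = J41_PIN_TO_SYSFS_GPIO[pin]
    base = f"{SYSFS_GPIO_ROOT}/gpio{gpio}"
    return {
        "export": f"{SYSFS_GPIO_ROOT}/export",  # write the GPIO number here first
        "export_value": str(gpio),
        "direction": f"{base}/direction",       # write "in" or "out"
        "value": f"{base}/value",               # read or write "0" / "1"
    }


print(sysfs_gpio_paths(7))
```

On the board itself you would write `export_value` into the `export` file as root, then set `direction` and `value`. Pins without a sysfs number in the table (power, ground, I2C, and the UART TX/RX pins) are rejected.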

Nvidia Jetson Nano Benchmarks

Test Setup

- Kingston 32GB Micro-SD HC-I U1

- Belkin 5V⎓4A AC Power Adapter

- 150Mbps Ethernet Internet Connection

- Active Air Cooling

- Logitech Wireless USB Mouse and Keyboard Combo

- HDMI Connection to 1080p Monitor

Phoronix Test Suite

I started with some of the standard tests from the Phoronix Test Suite. You can run the same tests by executing the commands below.

    sudo apt-get install -y php-cli php-xml
    # Download PTS from https://www.phoronix-test-suite.com/ and install it
    phoronix-test-suite benchmark 1809111-RA-ARMLINUX005
    # Accept the dependency installation and wait a few hours.
    # Test results are available at: ~/.phoronix-test-suite/test-results/

| Test | Pi Zero | Pi 3 B | Nano | TX1 | Notes |
|---|---|---|---|---|---|
| Tinymembench (memcpy) | 291 | 1297 | 3501 | 3862 | |
| TTSIOD 3D Renderer | | 15.66 | 41.00 | 45.05 | |
| 7-Zip Compression | 205 | 1863 | 3501 | 4526 | |
| C-Ray | | 2357 | 932 | 851 | Seconds (lower is better) |
| Primesieve | | 1543 | 468 | 401 | Seconds (lower is better) |
| AOBench | | 333 | 187 | 165 | Seconds (lower is better) |
| FLAC Audio Encoding | 971.18 | 387.09 | 104.01 | 78.86 | Seconds (lower is better) |
| LAME MP3 Encoding | 780 | 352.66 | 144.21 | 113.14 | Seconds (lower is better) |
| Perl (Pod2html) | 5.3830 | 1.2945 | 0.7114 | 0.6007 | Seconds (lower is better) |
| PostgreSQL (Read Only) | | 6640 | 12450 | 16079 | |
| Redis (GET) | 34567 | 213067 | 568431 | 484688 | |
| PyBench | | 24349 | 7080 | 6348 | ms (lower is better) |
| Scikit-Learn | | 844 | 489 | 434 | Seconds (lower is better) |

64-Bit Whetstone CPU Floating-Point Arithmetic

The Whetstone benchmark is a synthetic benchmark for evaluating the performance of computers. It was first written in Algol 60 in 1972 at TSU ( The Technical Support Unit of the Department of Trade and Industry – later part of the Central Computer and Telecommunications Agency or CCTA in the United Kingdom ).

I used the code written by Roy Longbottom. He originally wrote it for the 64-bit Raspberry Pi (ARMv8), which is the same architecture as the Jetson Nano. I did a local GCC build. You can download the code below:

There was no significant difference in MWIPS [ Millions of Whetstone Instructions Per Second ]. The Jetson Nano is just 111 MWIPS faster than the Raspberry Pi 3 B+.

    # cd to the source directory, then build:
    gcc whets.c cpuidc.c -lm -lrt -O3 -o whetstonePi64

Whetstone Results on Nvidia Nano

##############################################

Whetstone Single Precision C Benchmark armv8 64 Bit, Sun May 12 14:31:50 2019

| Loop content | Result | MFLOPS | MOPS | Seconds |
|---|---|---|---|---|
| N1 floating point | -1.12475013732910156 | 329.588 | | 0.070 |
| N2 floating point | -1.12274742126464844 | 306.781 | | 0.524 |
| N3 if then else | 1.00000000000000000 | | 4246.247 | 0.029 |
| N4 fixed point | 12.00000000000000000 | | 1415.917 | 0.266 |
| N5 sin,cos etc. | 0.49911010265350342 | | 28.007 | 3.556 |
| N6 floating point | 0.99999982118606567 | 230.691 | | 2.799 |
| N7 assignments | 3.00000000000000000 | | 944.467 | 0.234 |
| N8 exp,sqrt etc. | 0.75110864639282227 | | 17.620 | 2.527 |

MWIPS 1196.315 (total 10.006 seconds)

From File /proc/version

Linux version 4.9.140-tegra (buildbrain@mobile-u64-3531) (gcc version 7.3.1 20180425 [linaro-7.3-2018.05 revision d29120a424ecfbc167ef90065c0eeb7f91977701] (Linaro GCC 7.3-2018.05) ) #1 SMP PREEMPT Wed Mar 13 00:32:22 PDT 2019

Whetstone Results on Raspberry Pi 3 B+

##############################################

Whetstone Single Precision C Benchmark armv8 64 Bit, Sat May 25 19:33:45 2019

| Loop content | Result | MFLOPS | MOPS | Seconds |
|---|---|---|---|---|
| N1 floating point | -1.12475013732910156 | 390.682 | | 0.053 |
| N2 floating point | -1.12274742126464844 | 421.525 | | 0.346 |
| N3 if then else | 1.00000000000000000 | | 156858576.000 | 0.000 |
| N4 fixed point | 12.00000000000000000 | | 1738.984 | 0.196 |
| N5 sin,cos etc. | 0.49911010265350342 | | 21.011 | 4.292 |
| N6 floating point | 0.99999982118606567 | 348.137 | | 1.680 |
| N7 assignments | 3.00000000000000000 | | 1391.264 | 0.144 |
| N8 exp,sqrt etc. | 0.75110864639282227 | | 12.314 | 3.275 |

MWIPS 1085.542 (total 9.986 seconds)

From File /proc/version

Linux version 4.14.79-v7+ (dc4@dc4-XPS13-9333) (gcc version 4.9.3 (crosstool-NG crosstool-ng-1.22.0-88-g8460611)) #1159 SMP Sun Nov 4 17:50:20 GMT 2018

LINPACK Double Precision

The LINPACK Benchmarks are a measure of a system’s floating point computing power. Introduced by Jack Dongarra, they measure how fast a computer solves a dense n by n system of linear equations Ax = b, which is a common task in engineering. The performance measured by the LINPACK benchmark consists of the number of 64-bit floating-point operations, generally additions and multiplications, a computer can perform per second, also known as FLOPS. However, a computer’s performance when running actual applications is likely to be far behind the maximal performance it achieves running the appropriate LINPACK benchmark.

Compared to the Raspberry Pi 3 B+, the Jetson Nano delivers about 4.2 times the LINPACK performance (912.26 vs 216.60 MFLOPS).

LINPACK Results on Nvidia Nano

########################################################

Linpack Double Precision Unrolled Benchmark n @ 100

Optimisation armv8 64 Bit, Sun May 12 14:37:08 2019

Speed 912.26 MFLOPS

########################################################

From File /proc/version

Linux version 4.9.140-tegra (buildbrain@mobile-u64-3531) (gcc version 7.3.1 20180425 [linaro-7.3-2018.05 revision d29120a424ecfbc167ef90065c0eeb7f91977701] (Linaro GCC 7.3-2018.05) ) #1 SMP PREEMPT Wed Mar 13 00:32:22 PDT 2019

LINPACK Results on Raspberry Pi 3 B+

########################################################

Linpack Double Precision Unrolled Benchmark n @ 100

Optimisation armv8 64 Bit, Sat May 25 19:43:16 2019

Speed 216.60 MFLOPS

########################################################

From File /proc/version

Linux version 4.14.79-v7+ (dc4@dc4-XPS13-9333) (gcc version 4.9.3 (crosstool-NG crosstool-ng-1.22.0-88-g8460611)) #1159 SMP Sun Nov 4 17:50:20 GMT 2018
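The Whetstone and LINPACK comparisons quoted above come down to a difference and a ratio. A minimal Python sketch, using only the MWIPS and MFLOPS figures from the logs above (the dictionary and function names are illustrative):

```python
# Headline scores copied from the benchmark logs above.
RESULTS = {
    "Whetstone (MWIPS)": {"nano": 1196.315, "pi3bplus": 1085.542},
    "LINPACK DP (MFLOPS)": {"nano": 912.26, "pi3bplus": 216.60},
}


def speedup(nano, pi3bplus):
    """How many times faster the Nano score is than the Pi 3 B+ score."""
    return nano / pi3bplus


for name, score in RESULTS.items():
    delta = score["nano"] - score["pi3bplus"]
    ratio = speedup(score["nano"], score["pi3bplus"])
    print(f"{name}: +{delta:.1f} absolute, {ratio:.2f}x relative")
```

Seen as ratios, the two results differ sharply: the Whetstone score is only about 10% higher on the Nano, while the double-precision LINPACK score is more than four times higher.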

Bus Speed Benchmark

This benchmark is designed to identify reading data in bursts over buses. The program starts by reading a word (4 bytes) with an address increment of 32 words (128 bytes) before reading another word. The increment is reduced by half on successive tests, until all data is read.

Maximum MB/second data transfer speed is calculated as bus clock MHz x 2 for Double Data Rate (DDR) x bus width (at this time 4 bytes ARM, 8 bytes Intel) x number of memory channels. However, some of these specifications can be misleading and maximum speed options might not be provided on a particular platform. Where the maximum is not provided, there can be confusion as to whether specified MHz is raw bus clock speed or included DDR consideration.
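As a worked example of that formula, the sketch below plugs in the Jetson Nano memory figures from the comparison table earlier in this review (64-bit LPDDR4 @ 1600MHz). It assumes 1600MHz is the raw bus clock and that the 64-bit interface counts as a single 8-byte channel; the function name is made up for illustration.

```python
def peak_bandwidth_mb_s(bus_clock_mhz, bus_width_bytes, channels=1, ddr=True):
    """Theoretical peak = clock (MHz) x 2 (if DDR) x bus width (bytes) x channels."""
    transfers_per_clock = 2 if ddr else 1
    return bus_clock_mhz * transfers_per_clock * bus_width_bytes * channels


# Jetson Nano: 64-bit LPDDR4 @ 1600MHz (assumed to be the raw bus clock).
nano_peak = peak_bandwidth_mb_s(bus_clock_mhz=1600, bus_width_bytes=64 // 8)
print(f"Theoretical peak: {nano_peak} MB/s = {nano_peak / 1000:.1f} GB/s")
```

Under those assumptions the result is 25,600 MB/s, which matches the 25.6 GB/s in the specification table. The speeds measured by a single-threaded read benchmark like this one will sit well below that theoretical peak.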

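Compared to the Raspberry Pi 3 B+, the Jetson Nano has nearly double the bus speed for data sizes that no longer fit in cache (for example, 4019 vs 2015 MB/s for "Read All" at 65536 KB).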

Bus Speed Results on Nvidia Jetson Nano

#####################################################

BusSpeed armv8 64 Bit Sun May 12 14:39:49 2019

Reading Speed 4 Byte Words in MBytes/Second

Memory Inc32 Inc16 Inc8 Inc4 Inc2 Read

KBytes Words Words Words Words Words All

16 831 1118 2043 3333 4882 7105

32 1531 1848 2827 4088 5144 7533

64 584 778 1598 2890 4590 6672

128 624 837 1594 2837 4454 6612

256 609 839 1623 2875 4555 6618

512 613 846 1630 2801 4314 6619

1024 615 842 1612 2701 4496 6526

4096 188 195 594 1111 2007 3857

16384 163 172 549 1117 2025 3931

65536 164 172 557 1107 2006 4019

End of test Sun May 12 14:39:59 2019

From File /proc/version

Linux version 4.9.140-tegra (buildbrain@mobile-u64-3531) (gcc version 7.3.1 20180425

[linaro-7.3-2018.05 revision d29120a424ecfbc167ef90065c0eeb7f91977701] (Linaro GCC 7.3-2018.05) )

#1 SMP PREEMPT Wed Mar 13 00:32:22 PDT 2019

Bus Speed Results on Raspberry Pi 3 B+

#####################################################

BusSpeed armv8 64 Bit Sat May 25 19:43:56 2019

Reading Speed 4 Byte Words in MBytes/Second

Memory Inc32 Inc16 Inc8 Inc4 Inc2 Read

KBytes Words Words Words Words Words All

16 1147 1181 2896 4674 4852 4959

32 718 816 1440 2468 3555 4253

64 679 719 1340 2303 3407 4091

128 639 650 1194 2117 3267 4053

256 577 622 1113 2028 2630 3977

512 368 400 762 1421 2477 3529

1024 116 153 300 588 1132 2271

4096 105 140 275 531 1066 1915

16384 130 138 274 529 1083 1976

65536 130 139 272 528 1074 2015

End of test Sat May 25 19:44:07 2019

From File /proc/version

Linux version 4.14.79-v7+ (dc4@dc4-XPS13-9333)

(gcc version 4.9.3 (crosstool-NG crosstool-ng-1.22.0-88-g8460611))

#1159 SMP Sun Nov 4 17:50:20 GMT 2018

Blender Rendering Benchmark on Nvidia Jetson Nano

When I saw that it has a 64-bit quad-core ARM Cortex-A57 CPU @ 1.43GHz and a 128-core NVIDIA Maxwell GPU @ 921MHz, one use case that came to mind was rendering: can this system be a cheap alternative for it? I compared the rendering performance of the Jetson Nano with a system using an Intel(R) Core(TM) i3-3110M CPU @ 2.40GHz (2 cores, 4 logical processors) and an AMD Radeon HD 7600M Series GPU [ 600MHz ].

For this benchmark, I used Blender v2.79b on both systems and rendered the sample file Blender 2.74 – Fishy Cat without changing any parameters. The Jetson Nano took nearly twice as long to render the same frame.

Jetson Nano Blender CPU Render

Time Taken : 05:13:12 Memory Used : 464.32MB

Jetson Nano Blender GPU Render [ CUDA Enabled ]

Time Taken : 05:26:48 Memory Used : 464.32MB

Intel(R) Core(TM) i3-3110M CPU Blender CPU Render

Time Taken : 02:47:34 Memory Used : 466.65MB

AMD Radeon HD 7600M GPU Render

Time Taken : 02:43:73 Memory Used : 465.22MB
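To put a number on "nearly twice as long", the render times above can be converted to seconds and compared. This sketch assumes the times are in Blender's minutes:seconds:hundredths form (the last field of 02:43:73 cannot be seconds); the helper and dictionary names are illustrative.

```python
def blender_time_to_seconds(stamp):
    """Convert 'MM:SS:hh' (minutes, seconds, hundredths) to seconds."""
    minutes, seconds, hundredths = (int(part) for part in stamp.split(":"))
    return minutes * 60 + seconds + hundredths / 100


# Render times copied from the results above.
RENDER_TIMES = {
    "nano_cpu": "05:13:12",
    "nano_gpu": "05:26:48",
    "i3_cpu": "02:47:34",
    "radeon_gpu": "02:43:73",
}

seconds = {name: blender_time_to_seconds(t) for name, t in RENDER_TIMES.items()}
print(f"CPU: Nano takes {seconds['nano_cpu'] / seconds['i3_cpu']:.2f}x as long")
print(f"GPU: Nano takes {seconds['nano_gpu'] / seconds['radeon_gpu']:.2f}x as long")
```

With that reading of the timestamps, the Nano needs roughly 1.9 to 2 times as long as the comparison system, and its CUDA render is slightly slower than its own CPU render.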

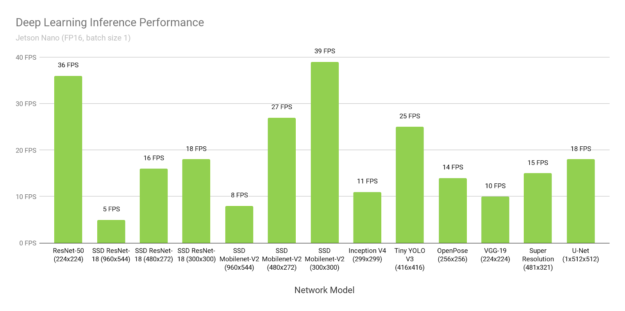

Deep Learning Inference Benchmarks [ Credit: Nvidia Blog ]

Jetson Nano can run a wide variety of advanced networks, including the full native versions of popular ML frameworks like TensorFlow, PyTorch, Caffe/Caffe2, Keras, MXNet, and others. These networks can be used to build autonomous machines and complex AI systems by implementing robust capabilities such as image recognition, object detection and localization, pose estimation, semantic segmentation, video enhancement, and intelligent analytics.

The figure shows results from inference benchmarks across popular models available online. See here for the instructions to run these benchmarks on your Jetson Nano. The inferencing used batch size 1 and FP16 precision, employing NVIDIA’s TensorRT accelerator library included with JetPack 4.2. Jetson Nano attains real-time performance in many scenarios and is capable of processing multiple high-definition video streams.

| Model | Application | Framework | NVIDIA Jetson Nano | Raspberry Pi 3 | Raspberry Pi 3 + Intel Neural Compute Stick 2 | Google Edge TPU Dev Board |

|---|---|---|---|---|---|---|

| ResNet-50 (224×224) | Classification | TensorFlow | 36 FPS | 1.4 FPS | 16 FPS | DNR |

| MobileNet-v2 (300×300) | Classification | TensorFlow | 64 FPS | 2.5 FPS | 30 FPS | 130 FPS |

| SSD ResNet-18 (960×544) | Object Detection | TensorFlow | 5 FPS | DNR | DNR | DNR |

| SSD ResNet-18 (480×272) | Object Detection | TensorFlow | 16 FPS | DNR | DNR | DNR |

| SSD ResNet-18 (300×300) | Object Detection | TensorFlow | 18 FPS | DNR | DNR | DNR |

| SSD Mobilenet-V2 (960×544) | Object Detection | TensorFlow | 8 FPS | DNR | 1.8 FPS | DNR |

| SSD Mobilenet-V2 (480×272) | Object Detection | TensorFlow | 27 FPS | DNR | 7 FPS | DNR |

| SSD Mobilenet-V2(300×300) | Object Detection | TensorFlow | 39 FPS | 1 FPS | 11 FPS | 48 FPS |

| Inception V4(299×299) | Classification | PyTorch | 11 FPS | DNR | DNR | 9 FPS |

| Tiny YOLO V3(416×416) | Object Detection | Darknet | 25 FPS | 0.5 FPS | DNR | DNR |

| OpenPose(256×256) | Pose Estimation | Caffe | 14 FPS | DNR | 5 FPS | DNR |

| VGG-19 (224×224) | Classification | MXNet | 10 FPS | 0.5 FPS | 5 FPS | DNR |

| Super Resolution (481×321) | Image Processing | PyTorch | 15 FPS | DNR | 0.6 FPS | DNR |

| Unet(1x512x512) | Segmentation | Caffe | 18 FPS | DNR | 5 FPS | DNR |
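One way to read the FPS figures in the table is as a per-frame time budget. The sketch below converts a few of the Jetson Nano results into milliseconds per frame and checks them against a 30 FPS target. That target is an assumption chosen for illustration, not a threshold from Nvidia, and the names are hypothetical.

```python
# A few Jetson Nano results copied from the table above (frames per second).
NANO_FPS = {
    "ResNet-50 (224x224)": 36,
    "SSD Mobilenet-V2 (300x300)": 39,
    "Tiny YOLO V3 (416x416)": 25,
    "Unet (1x512x512)": 18,
}


def frame_time_ms(fps):
    """Average time spent on one frame, in milliseconds."""
    return 1000.0 / fps


def meets_target(fps, target_fps=30.0):
    """True if the model keeps up with the assumed target frame rate."""
    return fps >= target_fps


for model, fps in NANO_FPS.items():
    status = "meets" if meets_target(fps) else "misses"
    print(f"{model}: {frame_time_ms(fps):.1f} ms/frame, {status} 30 FPS")
```

Whether a given rate counts as real-time depends on the application: a 15 FPS camera feed has twice the per-frame budget of a 30 FPS one.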

Nvidia Jetson Nano Review and FAQ

The Nvidia Jetson Nano is an awesome device with a lot of processing power. No device is perfect, though, and it has its pros and cons.

- PROS

- Cheap: just $99 or Rs. 8,899. More processing power and hardware resources per dollar than the Raspberry Pi.

- 4 x USB 3.0 Type-A ports for better connectivity to depth cameras and external accessories.

- 4K video processing capability, unlike the Raspberry Pi

- Multiple monitors can be hooked up

- Selectable power source

- CONS

- Limited RAM bandwidth of 25.6 GB/s, though still better than the Raspberry Pi

- microSD as the main storage device limits disk performance. Using the M.2 Key-E slot (PCIe x1) for an SSD, or a USB HDD / SSD, can solve this problem. Check this solution.

- Less software support: because the architecture is AArch64, much software will not work out of the box.

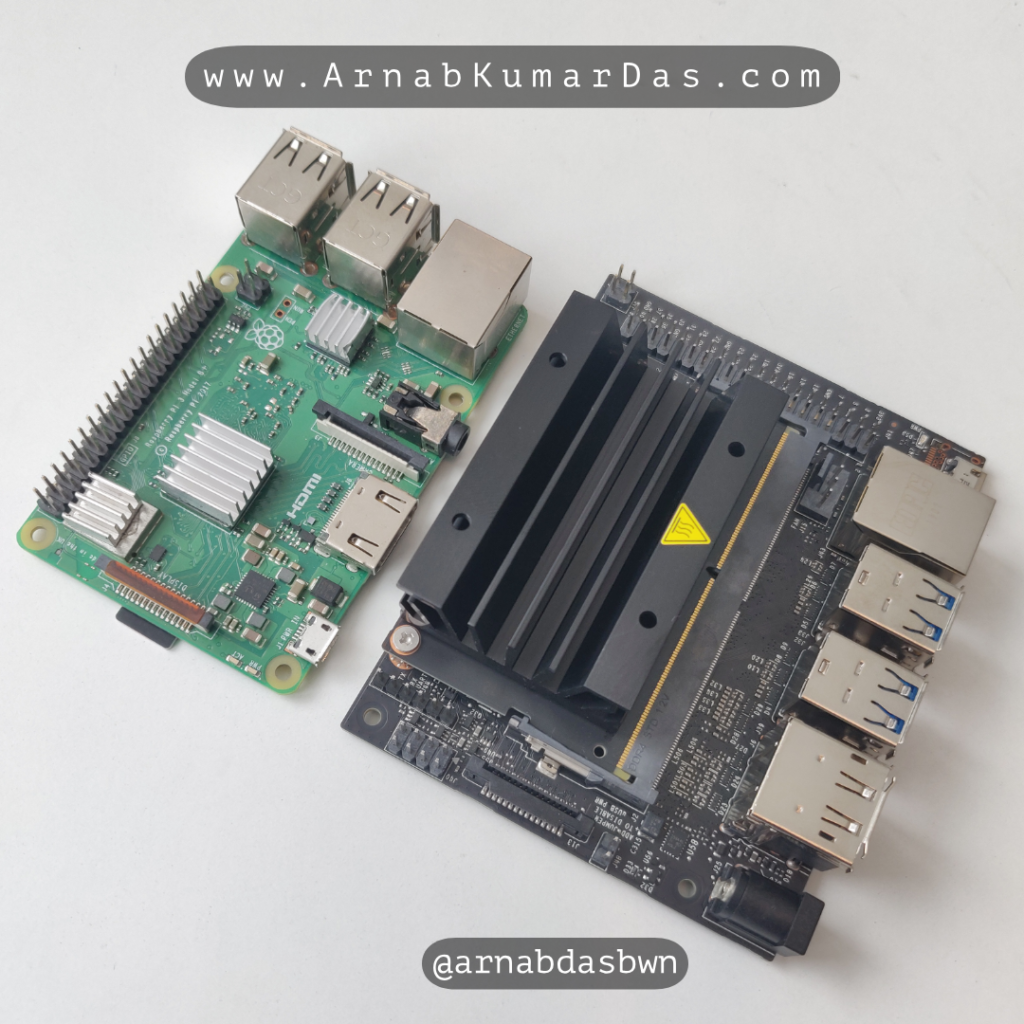

Raspberry Pi Vs Nvidia Jetson Nano

Size Difference Nvidia Jetson Nano and Raspberry Pi 3 B+

Nvidia Jetson Nano Vs Raspberry Pi 3 B+


Definitely yes. It has a better CPU, GPU, and RAM. It is much more fluid to use, and the difference in performance is evident if you use them side by side. Thanks to the extra RAM, web browsing and other activities are more responsive than on the Raspberry Pi 3 B+.

Yes, it can be used. The 4GB of RAM makes it very usable. I highly suggest moving the root file system to an M.2 SSD. Less software is available for the AArch64 architecture.

The Nvidia Jetson Nano costs only Rs. 8,899.

Theoretically you can use this, but an Intel processor or other Nvidia PC GPUs are far better.

As of now it is not possible, as there is no driver support from Nvidia. You can wait for their later releases. If support arrives, you could later connect a Volta GPU to this and train your neural network.

Intel wireless cards, SSDs, and PCI-based expansion devices: anything that is PCI-based [ if a driver exists ]. You will need a PCI extender, as there is no space available for devices bigger than an Intel wireless card.

Thank you for reading.

Happy Making and Hacking 😊


11 Comments

Pawan · August 10, 2019 at 11:57 am

Hi Arnab

I have a question regarding the fps of a model , you mention that jetson nano has 472 GFLOPS but yet the SSD Mobilenet-V2(300×300) runs only at 39 FPS even though the model only uses 1 GFLOPS, shouldn’t the fps be higher.

Am i missing something.

Crazy Engineer · August 11, 2019 at 2:29 pm

| Input size | Feature size | Feature memory | FLOPs |
|---|---|---|---|
| 150 x 150 | 1 x 1 x 128 | 1 GB | 39 GFLOPs |
| 300 x 300 | 1 x 1 x 128 | 4 GB | 146 GFLOPs |
| 450 x 450 | 1 x 1 x 128 | 10 GB | 336 GFLOPs |
| 600 x 600 | 2 x 2 x 128 | 17 GB | 574 GFLOPs |
| 750 x 750 | 2 x 2 x 128 | 27 GB | 890 GFLOPs |
| 900 x 900 | 2 x 2 x 128 | 39 GB | 1 TFLOPs |

Krutik · September 22, 2019 at 4:46 pm

Is the Jetson Nano suitable for real-time image processing using OpenCV?

Crazy Engineer · October 16, 2019 at 12:59 am

Yes, it can do it quite easily, but no processing is truly real-time. I would call it soft real-time.

Leena Ladge · July 12, 2020 at 12:16 am

Is it possible to read core wise temperature at run time ? If not , what is the temperature estimation model suitable to calculate core temperature and use it for temperature based scheduling

Alexanderre · October 8, 2020 at 6:30 pm

How about RaspberryPi 4? Would love to see an updated comparison

Crazy Engineer · October 10, 2020 at 11:18 pm

Thanks buddy. I am planning to do so, but currently I don’t own a v4 so waiting for help.

ubi de feo · October 12, 2020 at 12:15 pm

On Raspberry Pi 4 64 bit, together with php and php-xml I also had to

sudo apt install libsdl-1.2-dev cmake cmake-data

Sara · March 1, 2021 at 9:44 am

Is Blender 2.74 – Fishy Cat used here a good enough Benchmark for understanding / comparing GPU rendering capabilities of Jetson Nano?Google Coral?

Crazy Engineer · March 3, 2021 at 6:20 pm

It may not be able to give you an absolute comparison. I recommend you to run multiple GPU Benchmark Algorithm

Alan · March 31, 2022 at 12:24 pm

I am building a model that will use a TensorFlow deep neural network. We will be analyzing weather pattern data and applying corrections to the model in order to study and control the air flow motion. I am new to A.I. What do you think would be better suited? I have a choice between 2 platforms at roughly the same cost: a Jetson Nano 4GB Developer Kit or a Jetson TX1. I thank you for any input. alan